Portfolio

Here is a small sample of the fun projects I've worked on. You can find more on GitHub and on my blog, Count Bayesie.

A Damn Fine Stable Diffusion Book

My most recent book with Manning Publications. It focuses on showing you how to use open models and open source tools to create incredible generative AI images. Available now for purchase through early access, with print publication expected in Q2 2026.

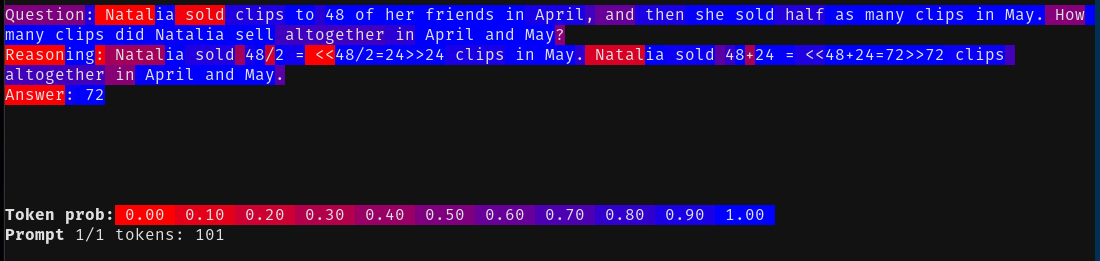

Token Explorer

An easy-to-use interface for interactively exploring the sampling process of an LLM. Manually step through how an LLM (Qwen/Qwen2.5-0.5B by default) generates text while monitoring properties of your in-progress generation, such as the probability and entropy of each token. A fun tool for understanding exactly how LLMs work during generation.
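To make the two quantities Token Explorer tracks a little more concrete, here is a minimal sketch (not code from the tool itself) of how next-token probabilities and their entropy can be computed from raw logits. The logits below are made-up numbers for a 4-token vocabulary; in a real model they would come from the final layer over the full vocabulary.

```python
import math

def softmax(logits):
    """Turn raw logits into a probability distribution over tokens.

    Subtracting the max logit first keeps math.exp from overflowing.
    """
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy_bits(probs):
    """Shannon entropy (in bits) of a next-token distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Made-up logits for a toy 4-token vocabulary.
uncertain = softmax([1.0, 1.0, 1.0, 1.0])   # model has no preference
confident = softmax([10.0, 0.0, 0.0, 0.0])  # model strongly prefers token 0

print(round(entropy_bits(uncertain), 3))  # 2.0 bits: uniform over 4 tokens
print(round(entropy_bits(confident), 3))  # close to 0: almost no uncertainty
```

High entropy at a step means the model is spreading probability across many candidates, which is often where stepping through generation by hand is most interesting.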

DeepLearning.AI: Getting Structured LLM Output

A project I led at .txt, working with Andrew Ng and his team at DeepLearning.AI to create a course on getting reliable output from LLMs. The course gives an in-depth overview of how to get predictably structured output from an LLM, covering libraries such as Outlines and Instructor and going into the details of constrained decoding.

The Bunny B1: Powered by SmolLM2

A project using structured generation to build tool use from scratch in support of Hugging Face's release of the SmolLM2 model. The demo shows how natural language inputs can be automatically transformed into tool/function calls, and it works well even with an extremely small model.

Coalescence

An article, which Andrej Karpathy described as "Very cool", detailing how structured generation makes it possible to skip unnecessary steps in LLM inference. This technique, referred to as "coalescence", allows for 2-5x speedups in LLM inference.
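The core idea can be sketched in a few lines. This is a simplified illustration, not the implementation from the article: it assumes the required output structure has already been compiled into a hypothetical finite-state machine (`EXAMPLE_FSM`) whose transitions are whole strings, and it uses a hypothetical `first_option` function as a stand-in for the model. Whenever the structure allows only one next token, that token is appended without running the model at all.

```python
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical FSM compiled from the required output structure:
# state -> {allowed next token: next state}
FSM = Dict[int, Dict[str, int]]

def generate(
    fsm: FSM,
    start: int,
    final: Set[int],
    choose: Callable[[List[str], List[str]], str],
) -> Tuple[List[str], int]:
    """Walk the FSM, only calling the model when there is a real choice."""
    state = start
    out: List[str] = []
    model_calls = 0
    while state not in final:
        allowed = fsm[state]
        if len(allowed) == 1:
            # Structure forces this token: append it, no forward pass needed.
            token = next(iter(allowed))
        else:
            # Genuine choice: ask the (stand-in) model, restricted to legal tokens.
            token = choose(out, sorted(allowed))
            model_calls += 1
        out.append(token)
        state = allowed[token]
    return out, model_calls

# Toy structure for {"name": "<Alice|Bob>"} using whole-string "tokens".
EXAMPLE_FSM: FSM = {
    0: {'{"': 1},
    1: {"name": 2},
    2: {'": "': 3},
    3: {"Alice": 4, "Bob": 4},
    4: {'"}': 5},
}

def first_option(prefix: List[str], options: List[str]) -> str:
    """Hypothetical stand-in for the LLM: always picks the first legal token."""
    return options[0]

tokens, calls = generate(EXAMPLE_FSM, start=0, final={5}, choose=first_option)
print("".join(tokens))  # {"name": "Alice"}
print(calls)            # 1 (the other 4 tokens were forced by the structure)
```

Real tokenizers complicate this, because the same fixed string can usually be split into tokens in several different ways; this sketch sidesteps that by treating each fixed string as a single token.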

Improving Prompt Consistency with Structured Generations

A collaborative research project with Hugging Face's Leaderboards and Evals research team, showing that structured generation yields more consistent results across evaluations as well as better results with fewer examples.

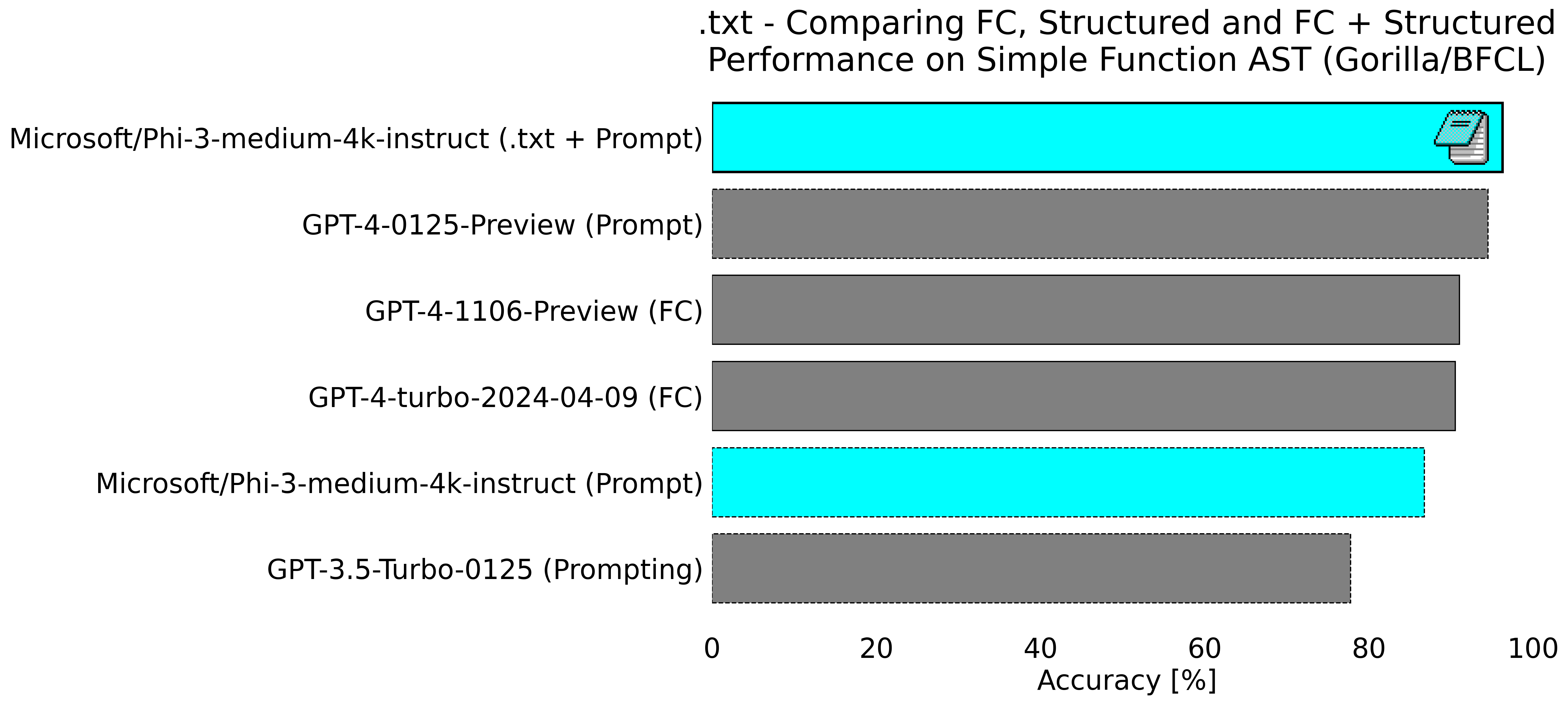

Beating GPT-4 with Open Source

Demonstrating that, with open source tools, small open-weights models can compete with proprietary LLMs such as GPT-4 on standard function calling (aka "tool use") tasks, as measured by the Berkeley Function Calling Leaderboard.

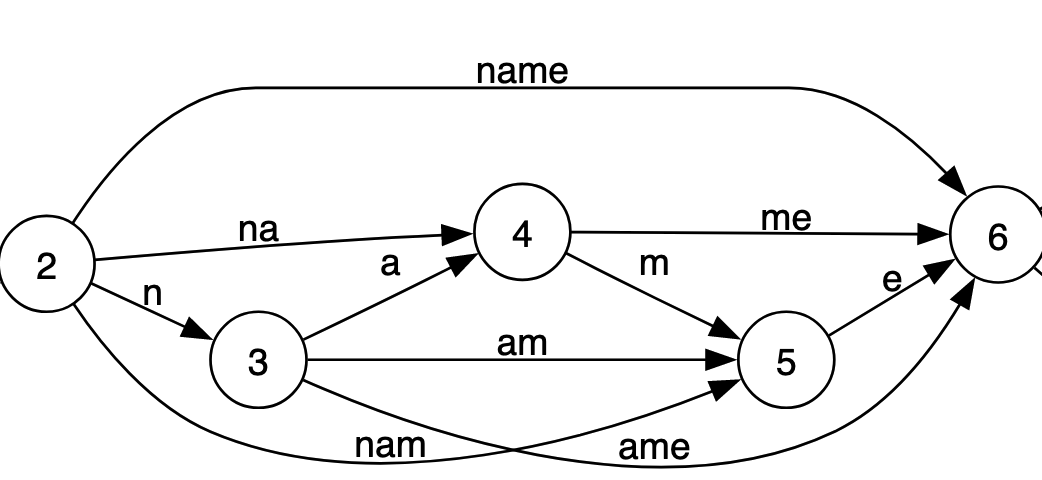

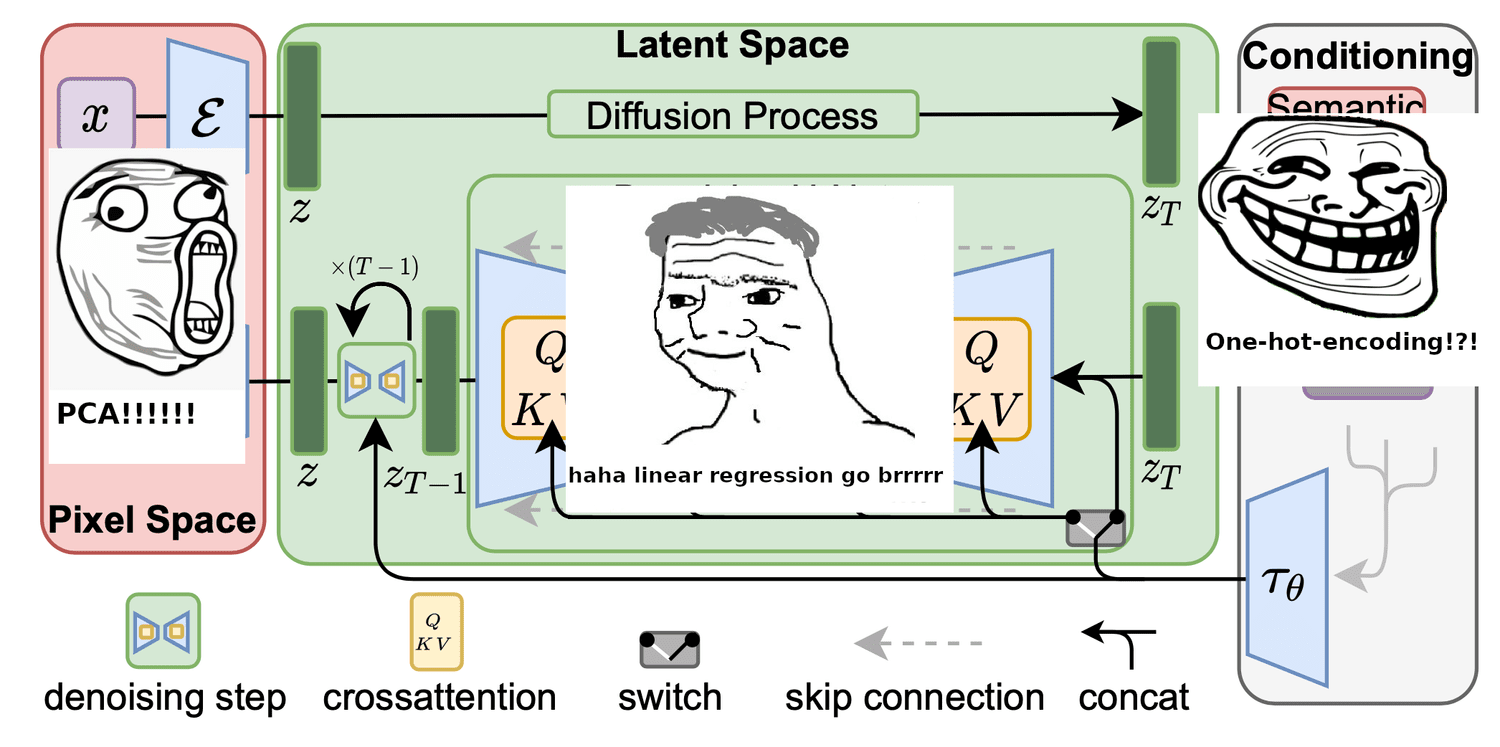

Linear Diffusion

An implementation of a diffusion model for generative AI using only linear models as the building blocks. Both an interesting experiment and a helpful guide for understanding the basic architecture of diffusion models.

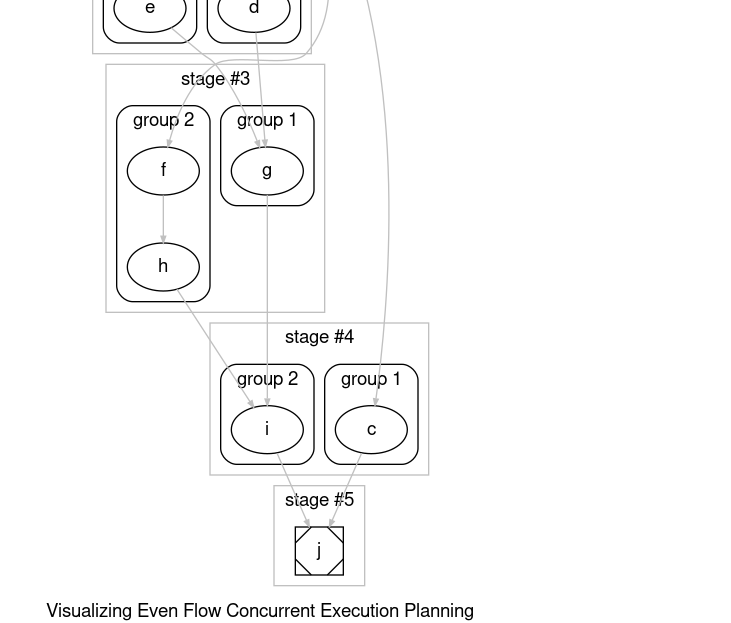

Evenflow

An experimental library for creating self-organizing systems of agents. The Python library makes it easy to describe a computational DAG that can self-organize and adapt to changes. Work in progress explores automating concurrent execution.

Bayesian Statistics the Fun Way

A beginner-friendly introduction to Bayesian statistics. Over 60,000 copies sold worldwide, translated into at least 5 languages, and frequently in the top 20 for the "Data Mining" and "Probability & Statistics" categories on Amazon.

Get Programming with Haskell

An intermediate book helping experienced programmers understand the power of functional programming with Haskell. The book introduces fundamental concepts in functional programming as well as Haskell's advanced type system.